In which we dive into the intricacies of data storage in software development.

Those familiar with the series can skip ahead to the next section.

For everyone else: welcome to the second part of the Toronto Fire Fan (TFF) series, in which I share the kinds of decisions senior software developers and system designers make every day.

Check out the intro and part 1 on data extraction.

If you’re a developer looking to advance into systems design and architecture, these posts will give you a taste of some of the factors you’ll want to start considering.

If you’re not a developer, these posts will give you a behind-the-scenes glimpse into what the developers making your software are up to.

Toronto Fire Fan is an app that plots, on a map, the active incidents Toronto Fire Services is responding to in real time. You can check it out here.

Data Storage

Last time, we got some data from the City of Toronto’s website. Now, we must put the data somewhere. But where, how, and why?

Let’s start with “why.”

In the previous post, I mentioned that the TFF app gets the current list of active incidents from a server, but sometimes the data the server returns is malformed, which makes it unusable. One solution is to store the most recent well-formed list so that my website’s visitors always have something to see.

There are two obvious storage options: store the data in memory (i.e., a cache), or store it on disk (i.e., persistent storage). A cache lets your app save and retrieve data much faster, but one of its biggest drawbacks is that if something happens to it, such as the server restarting, all your data is gone. There’s nuance to all of this that’s beyond the scope of this post. The point is that, as a developer, you will face decisions about how your data is stored.

For the use case I described above, a cache is sufficient. It’s not a big deal if I lose the most recent list of active incidents because TFF can just request another list from the data provider. It’s also OK if the app briefly can’t show anything, since this isn’t a life-critical or safety-critical app, nor will it cost anyone millions of dollars for every second of downtime.
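Here’s a minimal sketch of the “keep the last good list” idea in Python. It assumes the payload is a JSON object with an `incidents` array; the names `parse_incident_list`, `LastGoodCache`, and the `updated`/`incidents` fields are hypothetical, not TFF’s actual code. The list lives in process memory, so a restart empties it, which is exactly the trade-off described above.

```python
import json
from typing import Any, Optional


def parse_incident_list(raw: str) -> Optional[dict[str, Any]]:
    """Return the parsed payload if it looks well-formed, otherwise None.

    Assumed (hypothetical) shape: {"updated": "...", "incidents": [...]}.
    """
    try:
        payload = json.loads(raw)
    except json.JSONDecodeError:
        return None  # not valid JSON at all
    if not isinstance(payload, dict) or not isinstance(payload.get("incidents"), list):
        return None  # valid JSON, but not the shape we expect
    return payload


class LastGoodCache:
    """Keeps the most recent well-formed incident list in process memory.

    A restart empties it; that's acceptable here because the next
    successful fetch refills it.
    """

    def __init__(self) -> None:
        self._latest: Optional[dict[str, Any]] = None

    def update(self, raw: str) -> Optional[dict[str, Any]]:
        """Store `raw` if it's well-formed; return whatever list should be served."""
        payload = parse_incident_list(raw)
        if payload is not None:
            self._latest = payload  # only overwrite with good data
        return self._latest  # None until the first good response arrives
```

In use, the app would pass each raw response to `update()` and serve whatever it returns; a malformed response simply leaves the previous list in place.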

The other major benefit of caching the active incidents list is performance. Backend developers, in particular, are obsessed with performance tuning because the backend design has a huge impact on app performance. It’s all about one question: “How can I make the app work as fast as possible?”

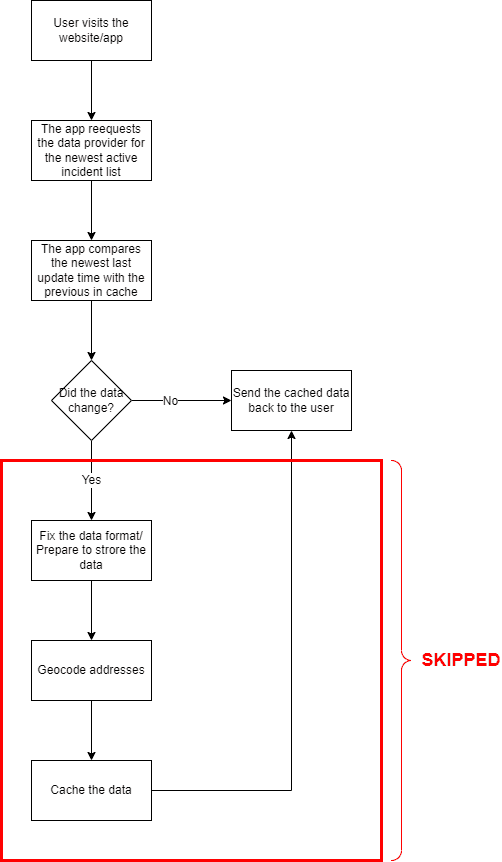

In this case, the data provider attaches a “last update time” to every active incident list that the TFF app receives. So, when someone visits the TFF website/app, the app gets the most recent active incident list from the data provider and compares its last update time with the one that’s cached. If the times are identical, the app knows that nothing has changed, so it sends the cached data back to the user, skipping the rest of the time-consuming data processing that would otherwise take place. The app responds faster.
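A rough sketch of that check is below. It assumes the provider’s timestamp arrives as an `updated` field, and that `fetch` and `process` stand in for TFF’s real request and processing steps; all of these names are hypothetical. Note that the request to the provider still happens on every visit; only the expensive processing is skipped.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Optional


@dataclass
class IncidentService:
    # Hypothetical hooks: fetch() returns {"updated": ..., "incidents": [...]},
    # process() stands in for the time-consuming transformation step.
    fetch: Callable[[], dict[str, Any]]
    process: Callable[[dict[str, Any]], Any]
    _cached_time: Optional[str] = field(default=None, init=False)
    _cached_result: Any = field(default=None, init=False)

    def get_incidents(self) -> Any:
        payload = self.fetch()  # still asks the provider on every visit
        updated = payload["updated"]
        if updated == self._cached_time:
            return self._cached_result  # same "last update time": reuse cached result
        result = self.process(payload)  # new data: do the expensive work once
        self._cached_time = updated
        self._cached_result = result
        return result
```

If several visitors arrive within the same five-minute window, only the first one pays for `process()`; everyone else gets the cached result.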

There is one other use case for the TFF app: historical data analysis. Though this feature isn’t currently active, the app can be configured to collect active incident data for analysis over a longer timeframe (e.g., a month or a year). In that case, you’re less likely to lose data if you store it on disk, in persistent storage, which usually means some sort of database.

This is another critical decision point. It’s critical because once the decision is made and the database starts filling up with data, it’s laborious to move that data to a different type of storage. You can change your mind later, but it’ll be expensive. You’ll have to restructure or change your data in some way without losing or corrupting any. That can be hard.

When in doubt, strive for reversible changes. If you’re stuck between two options, A and B, and it’s easier to switch from A to B later than vice versa, choose A.

With TFF, I settled on a document database (a type of NoSQL database) because it was the simplest option given the type of data I’m storing. By simplest, I mean the least development work. I receive a JSON object with an array of active incidents, and I can just store it, as-is, in the database. Convenient! If I had chosen a relational database instead, the app would first have had to transform the data into tables and rows before it could be stored. That would be extra development work. This approach also worked because the document database system includes analytical tools to help create graphical reports on the data if or when I decide to build those features.
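To make the difference concrete, here’s a rough sketch using Python’s built-in `sqlite3` module for both sides. SQLite is a relational database, not a document database; storing the JSON text in a single column merely stands in for the “store it as-is” approach. The payload fields (`updated`, `id`, `type`, `units`) are hypothetical, not the real feed’s schema.

```python
import json
import sqlite3
from typing import Any


def store_as_document(conn: sqlite3.Connection, payload: dict[str, Any]) -> None:
    """Document-style: keep the whole JSON object as-is, one row per snapshot."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS snapshots (updated TEXT PRIMARY KEY, doc TEXT)"
    )
    conn.execute(
        "INSERT INTO snapshots VALUES (?, ?)",
        (payload["updated"], json.dumps(payload)),
    )


def store_relational(conn: sqlite3.Connection, payload: dict[str, Any]) -> None:
    """Relational: flatten the nested incident list into rows before inserting."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS incidents (snapshot TEXT, id TEXT, type TEXT)"
    )
    conn.execute(
        "CREATE TABLE IF NOT EXISTS incident_units "
        "(snapshot TEXT, incident_id TEXT, unit TEXT)"
    )
    snap = payload["updated"]
    for inc in payload["incidents"]:
        conn.execute(
            "INSERT INTO incidents VALUES (?, ?, ?)", (snap, inc["id"], inc["type"])
        )
        # A nested list (the responding units) needs a table of its own.
        conn.executemany(
            "INSERT INTO incident_units VALUES (?, ?, ?)",
            [(snap, inc["id"], unit) for unit in inc["units"]],
        )
```

The document-style function doesn’t care what’s inside the payload; if the feed adds a field tomorrow, it gets stored automatically. The relational version needs a schema that mirrors the data’s shape, plus code to flatten nested lists, and both have to change whenever the feed does.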

There’s one more consideration if you’re looking to collect a complete set of data from now onward for long-term analysis. In some systems, a data provider may “push” data into your system. TFF, however, pulls data: the app needs to request the data from the provider’s server before it receives it. But making those requests can be expensive, and I don’t want to make one call per second if I don’t have to.

So, the question is: “How frequently does TFF need to request data to ensure it has a complete set going forward?” According to the City of Toronto’s website, the data is updated once every five minutes on their end. Perfect! That means the TFF app doesn’t need to make more than one request every five minutes; anything more frequent would be wasteful. Now, I don’t know about you, but I don’t want to sit here clicking a button every five minutes, 24 hours a day. So, I set up a scheduler (as you can see in the system diagram) that tells TFF to send that request once per five-minute interval. Easy peasy!
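The post doesn’t say which scheduler TFF uses (cron jobs and cloud schedulers are common choices). As a minimal, hypothetical stand-in, here’s an in-process Python loop that calls a task once per five-minute interval; `run_every` and its parameters are names made up for this sketch. The `clock` and `sleep` parameters exist only so the loop can be tested without actually waiting.

```python
import time
from typing import Callable, Optional

FIVE_MINUTES = 5 * 60  # seconds; matches the provider's stated update interval


def run_every(
    task: Callable[[], None],
    interval: float = FIVE_MINUTES,
    max_runs: Optional[int] = None,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Call `task` once per `interval` seconds; return how many times it ran.

    Runs forever when `max_runs` is None.
    """
    runs = 0
    next_start = clock()
    while True:
        try:
            task()
        except Exception as exc:  # one failed fetch shouldn't stop the schedule
            print(f"fetch failed: {exc}")
        runs += 1
        if max_runs is not None and runs >= max_runs:
            return runs
        next_start += interval  # schedule from the previous start, not the finish
        now = clock()
        if next_start > now:
            sleep(next_start - now)
        else:
            next_start = now  # running late: skip missed slots instead of bursting
```

For example, `run_every(fetch_and_store)` would call a hypothetical `fetch_and_store` function every five minutes indefinitely. Scheduling from fixed start times, rather than sleeping a full five minutes after each request finishes, keeps slow requests from gradually pushing the schedule later.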

Now that we have the data, we need to send it to the frontend, where it will be presented in an easily understandable format for TFF website visitors. How is that done, and what kinds of decisions do senior software developers make there? Stay tuned for the next post to find out!

If you found this post or this series interesting, or if you know someone who might find this useful, please consider sharing it.

If you’re a developer, you can now point your friends and family to this series and say, “You see! This is what I do!”

As always, leave your questions and comments below. I’m also on Twitter (@JustSomeDev) if you’d like to chat with me there.

Thank you for reading, and I’ll see you in the next one.